At Xodiac, we’ve worked with all kinds of organizations on their OKRs: scrappy startups trying to find product-market fit, massive financial institutions, government agencies wrestling with digital transformation, even a few Fortune 500 companies that thought they had it all figured out. And almost every time, the conversation starts the same way:

“Our OKRs just feel like… busy work.”

You know the drill. Teams dutifully update their dashboards, hit 90% of their targets, and celebrate at the quarterly review meeting. Then someone inevitably asks the uncomfortable question: “So what did we actually accomplish here?”

Crickets.

My colleague, Gino Marckx, has written before about why quarterly OKRs are broken: rigid cycles push teams to measure activity instead of impact. But after sitting through more OKR workshops than I care to count, I’ve realized something: most organizations are solving the wrong problem entirely.

The Problem: We’re Measuring All the Wrong Stuff

Here’s what I hear every quarter in review meetings:

- “We launched 12 marketing campaigns!” (Customer acquisition cost went up)

- “Mobile redesign shipped on time!” (User engagement actually dropped)

- “Interviewed 50 customers!” (Still building features nobody wants)

- “Published 24 blog posts!” (Website conversions are flatlining)

Everyone’s checking boxes and patting themselves on the back while the business metrics that actually matter stay stubbornly stuck.

Look, I get it. Outputs feel good to measure because they’re under your control. You can always write another blog post or ship another feature. But outputs are just the stuff you make. Outcomes are whether that stuff actually changes anything.

The companies that make OKRs work? They’ve figured out how to obsess over outcomes while treating outputs as bets. Some bets pay off, some don’t. The key is knowing which is which before you double down.

When Strategy Meets Reality (And Stumbles)

I watch this happen all the time: leadership crafts these brilliant strategies, everyone nods enthusiastically in the boardroom, then teams go back to their desks and… build the same stuff they were building last quarter.

Three months later, everyone’s confused why the bold vision isn’t happening.

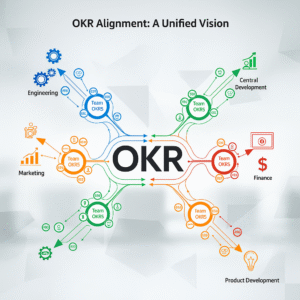

This is where we step in. We built the FACTS framework specifically for this problem:

- Focus: Pick 3-5 outcomes that actually move the needle (not 47)

- Align: Make sure every team’s work ladders up to something that matters

- Commit: Set targets that require new thinking, not just working harder

- Track: Watch both early signals and final results (we’ve written about this separately)

- Stretch: Get comfortable with goals that make you a little nervous

But here’s the thing frameworks don’t solve: teams use the same words to mean completely different things. I learned this the hard way.

I’ll be in a workshop and marketing says “customer engagement.” Sounds great! Then I find out they mean Twitter likes while product thinks it means daily active users. Sales thinks it’s email responses. Everyone’s optimizing for totally different things.

That’s why I started using Clean Language techniques in these sessions. Simple questions that surface what people actually mean: “When you say ‘customer engagement,’ what specifically would tell you it’s working?” Boring? Maybe. But it prevents teams from spending three months solving different problems.

Stop Measuring Theater

I’ve sat through quarterly reviews where teams celebrated hitting 90% of their OKRs while the CEO quietly wondered why revenue was flat. That’s what happens when you optimize for the wrong thing.

Here’s what I mean:

Theater: “Launch onboarding email sequence by Q2”

Reality: “Increase new user activation from 30% to 50%”

Theater: “Complete competitive analysis presentation”

Reality: “Improve win rate against Competitor X from 40% to 55%”

Theater: “Hire 12 engineers this quarter”

Reality: “Cut feature delivery time from 8 weeks to 5 weeks”

The theater version feels productive and looks good in status reports. The reality version forces uncomfortable questions about whether your work actually matters.

John Doerr discusses this in Measure What Matters: OKRs are about results, not checking boxes. But most people miss the point: you need both leading indicators (early warning signs) and lagging indicators (final scoreboard) to know if you’re actually winning. I break this down more in how to align OKRs across departments, but the basic idea is simple: don’t wait until the quarter’s over to find out you failed.

What Actually Works

After years of trial and error, here’s what I do with clients to turn OKRs from paperwork into progress:

Start with the change you want to see. Before anyone talks about campaigns or features or hiring plans, we get specific about what’s different if we succeed. More customers staying longer? Faster product delivery? Higher deal values? Start there, then figure out what might cause that change.

Draw clear lines to strategy. Every OKR should answer: “How does this help us win?” If you can’t connect it to your company’s biggest priorities in one sentence, cut it.

Track early signals. Don’t wait for quarterly results to know you’re in trouble. Pipeline quality predicts revenue. User engagement depth predicts retention. Team velocity predicts delivery speed. Find your early warnings.

Keep it simple. I’ve never seen a company succeed with more than 5 major objectives. Most teams can handle 3-4 key results per objective. Everything else is noise. Here’s a deeper dive on limiting work in progress.

Check in weekly. Monthly reviews are too slow. Fifteen-minute weekly check-ins let teams pivot when things aren’t working.

Question everything. Use Clean Language questions to make sure everyone means the same thing: “What would you see that tells you customer engagement is happening?” “What needs to change for retention to improve?” It sounds basic, but it prevents months of working on the wrong stuff.

A Story from the Trenches

Last year, we worked with a SaaS company whose OKRs were textbook activity theater:

Their OKR: “Release version 2.0 of mobile app with 15 new features by Q2”

They shipped on time. Marketing wrote a press release. Engineering felt accomplished. But mobile adoption stayed terrible and half the features went unused.

Our reframe: “Increase 30-day mobile retention from 40% to 60%”

Suddenly, everything changed. Instead of building features, they started asking why people weren’t sticking around. Turns out the signup process was a nightmare and new users got lost immediately.

So they tried different stuff:

- Fixed the brutal signup flow (tracked completion rates)

- Added contextual help (watched support tickets drop)

- Built usage tracking (saw which features mattered)

- Created progressive onboarding (monitored daily active users)

Result: Retention jumped from 40% to 55% in one quarter. That’s a 15-point gain, or roughly a 37% relative improvement, from changing what they measured, not what they built.

More importantly? The team started thinking like scientists instead of acting like a feature factory. They’d run experiments, see what moved retention, then do more of what worked. Way more fun than just shipping stuff and hoping.
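If you want to sanity-check numbers like the ones in this story, the arithmetic is simple enough to script. This is a minimal sketch, not a tool we use with clients: the function names `relative_change` and `progress_to_target` are made up for illustration, and the only inputs are the retention figures quoted above (40% baseline, 55% result, 60% target).

```python
def relative_change(before: float, after: float) -> float:
    """Change from `before` to `after`, as a fraction of `before`."""
    return (after - before) / before


def progress_to_target(start: float, current: float, target: float) -> float:
    """Share of the distance from `start` to `target` covered so far."""
    return (current - start) / (target - start)


# Retention figures from the story, in percentage points.
baseline, result, target = 40, 55, 60

rel = relative_change(baseline, result)
prog = progress_to_target(baseline, result, target)

print(f"Point gain: {result - baseline} points")
print(f"Relative improvement: {rel:.1%}")
print(f"Progress toward target: {prog:.0%}")
```

Keeping the point gain and the relative change side by side is deliberate: "15 points" and "about 37%" describe the same result, and mixing them up is an easy way to confuse a review meeting.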

When It Clicks

Companies that figure out outcome-focused OKRs see some pretty obvious changes:

Leadership stops asking “What did you do?” and starts asking “What changed?” Executives can actually see the connection between team effort and business results instead of just nodding through activity reports.

Different departments stop fighting over resources because they’re all optimizing for the same outcomes. Marketing and product start collaborating instead of blaming each other when numbers don’t move.

Teams naturally focus on high-impact work. When success is measured by results, activities that don’t move the needle get dropped automatically.

People feel like their work matters. There’s a huge difference between “I shipped 12 features” and “I increased customer retention by 20%.” One feels like busy work, the other feels like making a dent in the universe.

Innovation speeds up. Teams experiment more boldly when they’re judged on outcomes instead of whether they delivered what they promised in January.

Even Harvard Business Review points out in When OKRs Don’t Work that the biggest failure isn’t the framework; it’s the focus on outputs instead of outcomes.

Making It Happen

OKRs aren’t broken. We just use them wrong.

I’ve seen them turn into bureaucratic nonsense when teams optimize for looking busy. I’ve also seen them drive breakthrough results when organizations commit to measuring what actually matters and connecting daily work to real outcomes.

The difference isn’t in the methodology; it’s in having the discipline to prioritize results over activities, even when activities feel safer to manage.

Here’s where to start:

Check your current OKRs. How many measure outputs versus outcomes? If it’s more than 20% outputs, you’ve got work to do.

Connect everything to strategy. If you can’t draw a straight line from each OKR to your company’s most important priorities, cut it.

Find your early warning signals. Don’t wait for quarterly results to know if you’re succeeding.

Cut your scope in half. Better to make real progress on fewer things than marginal progress on everything.

Review weekly. Make OKRs part of how you run the business, not a quarterly compliance exercise.

Make assumptions explicit. Use Clean Language to ensure teams define success the same way.
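The first check on that list (how many of your OKRs measure outputs versus outcomes) is easy to run as a quick tally. Here is a rough sketch, assuming someone has already tagged each key result by hand as an "output" or an "outcome"; the `KeyResult` class, the function names, and the sample tags are all illustrative, and the 20% cutoff is just the rule of thumb from above. The sample key results are the theater and reality examples from earlier in this post.

```python
from dataclasses import dataclass


@dataclass
class KeyResult:
    text: str
    kind: str  # "output" or "outcome", tagged by a human reviewer


OUTPUT_THRESHOLD = 0.20  # rule of thumb from this article, not a standard


def output_share(key_results: list[KeyResult]) -> float:
    """Fraction of key results tagged as outputs (0.0 for an empty list)."""
    if not key_results:
        return 0.0
    outputs = sum(1 for kr in key_results if kr.kind == "output")
    return outputs / len(key_results)


def needs_rework(key_results: list[KeyResult],
                 threshold: float = OUTPUT_THRESHOLD) -> bool:
    """True when outputs make up more than `threshold` of the key results."""
    return output_share(key_results) > threshold


sample = [
    KeyResult("Launch onboarding email sequence by Q2", "output"),
    KeyResult("Increase new user activation from 30% to 50%", "outcome"),
    KeyResult("Hire 12 engineers this quarter", "output"),
    KeyResult("Improve win rate against Competitor X from 40% to 55%", "outcome"),
    KeyResult("Cut feature delivery time from 8 weeks to 5 weeks", "outcome"),
]

print(f"Output share: {output_share(sample):.0%}")
print("Needs rework" if needs_rework(sample) else "Looks outcome-focused")
```

The tagging is the hard part, and no script does it for you. That conversation about whether a key result is really an outcome is where the Clean Language questions earn their keep.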

Do this consistently, and OKRs stop being busy work. They become the system that turns strategy into execution, and execution into results that matter.

By keeping OKRs on track and following best practices, teams and organizations can implement them successfully, especially when using Xodiac’s FACTS methodology.